Introduction

Shortenr is a URL shortener application that allows both registered users and unregistered users to create a short alias for a long URL link.

Shortenr is built with a robust analytics feature that lets link owners track individual links, analyze their audience, and measure campaign performance.

The analytics we track include the per-hour, per-day, per-week, and per-month visit counts of each link, as well as the country, device, OS, and browser of the users accessing the links. We also track the website that referred each visitor to the link.

Features

An authentication system with sign-up, email verification, login, and logout, as well as an option for users to delete their account.

URL Shortening for both registered and unregistered users

URL redirection analytics including the:

• Country where the link was accessed from

• Browser it was accessed with

• Device the link was accessed from

• Operating system of the device the link was accessed with.

• Website the link was referred from.

• Per-hour, per-day, per-week, and per-month view counts

Only the per-month, per-day, and total view counts are currently rendered in the frontend.

Free publicly accessible API

Real-world uses

Aside from the general convenience Shortenr provides by creating a short link for an inconveniently long one, the analytics features provide several insights about the people who access the links.

The country analytics describe where a link's visitors are located. Blog owners, for instance, can learn the geographic spread of their readers by shortening their links with our app.

The device-related analytics, such as the OS and device type, are most useful for people who sell gadgets and gadget accessories, but are not limited to them.

With the insight our application provides about the devices and operating systems of the people who access a link, gadget accessory sellers can get an idea of what their customers are likely to need. This is just one of the use cases of these analytics.

The referrer analytics show the performance of a campaign or advert you run on different platforms like Facebook, Twitter, LinkedIn, and even WhatsApp, all in one place.

The date and time analytics have more uses than I can enumerate here.

The beautiful part of the date and time analytics is that they take all timezones into consideration.

........So much for the six letters we give you😊

Meet the team

Shortenr was built through the collaboration of a backend guy and a frontend guy. The rest of this post is written from the point of view (POV) of each team member.

Meet the Backend Guy

My name is Ahmad. I am a technical writer and a backend developer who works with Django, Django Rest Framework, and Django Channels.

Meet the frontend Guy

Hi! My name's Abass. I am a frontend developer who works with React, Tailwind CSS, and Next.js.

Tech Stack

Backend Tech stack

Ahmad's POV

While building this project, I used the following tools:

- Django

- Django Rest Framework to build the API

- Redis as the message broker for Celery workers

- Planetscale for the database

- Celery for long-running tasks

- Heroku for deployment

- GitHub for version control

Frontend Tech Stack

Abass's POV

Tools I used during the course of this project include:

- Next.js framework for page routing.

- React Context for global state management.

- Tailwind CSS for styling.

- Eruda to access a mobile browser's console [ was this necessary :(? Yeah, it was. Read on and see :) ]

- Vercel for Deployment

- GitHub for Version Control.

Challenges

Ahmad's POV

The following are some of the challenges I faced when building the backend.

Building the analytics and deploying the app.

Tracking the analytics was easy. However, performing annotation and aggregation on them and sending the results with the payload was tough for me.
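To illustrate the kind of aggregation involved, here is a minimal sketch in plain Python rather than the Django ORM annotations used in the backend. The function names and sample timestamps are hypothetical; the idea is only that each visit timestamp is bucketed by day and by month and then counted.

```python
from collections import Counter
from datetime import datetime, timezone


def visits_per_day(visits: list[datetime]) -> Counter:
    """Count visits per calendar day, keyed as YYYY-MM-DD."""
    return Counter(visit.strftime("%Y-%m-%d") for visit in visits)


def visits_per_month(visits: list[datetime]) -> Counter:
    """Count visits per calendar month, keyed as YYYY-MM."""
    return Counter(visit.strftime("%Y-%m") for visit in visits)


# Hypothetical visit timestamps for one link, stored in UTC.
sample_visits = [
    datetime(2022, 7, 1, 9, 15, tzinfo=timezone.utc),
    datetime(2022, 7, 1, 18, 40, tzinfo=timezone.utc),
    datetime(2022, 8, 3, 7, 5, tzinfo=timezone.utc),
]

print(visits_per_day(sample_visits))
print(visits_per_month(sample_visits))
```

In a real Django project the same grouping would normally be pushed down to the database so that only the counts travel in the payload.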

Deployment was the main challenge. I initially deployed on a DigitalOcean Ubuntu server with Nginx and Gunicorn. It was my first time doing that, so it took me almost a day to get everything working. However, I had to redeploy on Heroku for reasons discussed in the frontend vs backend challenges section.

I did not expect deploying on Heroku to be as hard as on DigitalOcean because I have used Heroku multiple times. However, things took a different turn that night because of an import error: a small, seemingly insignificant mistake that took over two hours of my time to fix!

I always split my Django configuration by environment. I have a development.py file that holds the configuration for the development environment, a production.py file for the production environment, and a base.py file for the configuration shared by both.

While importing the configuration from base.py into production.py, I imported the base module itself instead of the settings it contains:

from . import base

Instead of:

from .base import *

With the first import, every setting has to be accessed with the base. prefix, so none of them is defined at the top level of production.py, which is where Django looks for them. I couldn't figure it out earlier because the app was deploying successfully but not starting, and the error message in the Heroku log was not that helpful either.
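The difference between the two imports can be simulated without Django. The sketch below is only an illustration: the module objects, the example settings, and the visible_settings helper are hypothetical stand-ins. It relies on the fact that Django treats the uppercase top-level names of the settings module as settings.

```python
import types

# A stand-in for base.py with two example settings (hypothetical values).
base = types.ModuleType("base")
base.DEBUG = False
base.ALLOWED_HOSTS = ["example.com"]


def visible_settings(module: types.ModuleType) -> set[str]:
    """Return the uppercase top-level names, which Django reads as settings."""
    return {name for name in dir(module) if name.isupper()}


# Equivalent of `from . import base`: production only gains the name `base`.
production_wrong = types.ModuleType("production")
production_wrong.base = base

# Equivalent of `from .base import *`: every public name is copied across.
production_right = types.ModuleType("production")
for name, value in vars(base).items():
    if not name.startswith("_"):
        setattr(production_right, name, value)

print(visible_settings(production_wrong))  # nothing at the top level
print(visible_settings(production_right))
```

With the module import, the settings exist only as attributes of base, so the production module looks empty to anything that scans it for settings.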

Another challenge was associated with using Planetscale. The first part of it was finding a community group for Planetscale users. The other was implementation-related. I needed a database field that allows more than 255 characters for users to submit their long URLs. A TextField should have worked, but for reasons I don't understand, it was being represented as VARCHAR(255) in my Planetscale database schema.

I scheduled a call with the Planetscale team, which included Ethane, Sara, and Savanna. I discussed my experience of using the database with them and told them about some of my challenges. They were very supportive: they gave me the link to the user community and made some suggestions about branching and deploying the database schema so that changes take effect in the production database.

The last and greatest challenge of all was taking timezones into consideration. Since some of our analytics are time-based, we had to account for timezones, and if you have worked with timezones before, you can testify to how difficult it can be: things get out of hand fast, and there is a wide range of things to consider.

In Shortenr, a link can be created by a user in one timezone and accessed by another user in a different timezone. In the initial implementation, visit times were saved in the timezone of the user who accessed the link. This led to discrepancies when a link was accessed from a timezone behind the one it was created in: the creation date could end up ahead of the last-visited date, which doesn't make sense😂. To solve this, we save all date and time data in UTC and record, at sign-up, the timezone of each user who can create links and track their analytics. The date and time data are then converted from UTC to the link owner's timezone when the payload is rendered.
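The store-in-UTC, convert-on-render approach can be sketched with the standard library alone. This is a minimal illustration, not the project's code: to_owner_time is a hypothetical helper, and the fixed UTC+1 offset stands in for whatever timezone the owner chose at sign-up.

```python
from datetime import datetime, timedelta, timezone, tzinfo


def to_owner_time(stored_utc: datetime, owner_tz: tzinfo) -> datetime:
    """Convert a UTC timestamp from storage into the link owner's timezone."""
    if stored_utc.tzinfo is None:
        raise ValueError("expected a timezone-aware datetime")
    return stored_utc.astimezone(owner_tz)


# A visit recorded late in the evening, stored in UTC.
visited_at = datetime(2022, 7, 1, 23, 30, tzinfo=timezone.utc)

# Assumed owner timezone: a fixed UTC+1 offset, used here for illustration.
owner_tz = timezone(timedelta(hours=1))

local_visit = to_owner_time(visited_at, owner_tz)
print(visited_at.isoformat())
print(local_visit.isoformat())
```

The stored value never changes; only the rendered value shifts, which here moves the visit onto the next calendar day for the owner. That is also why per-day counts have to be bucketed after the conversion, not before.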

Abass's POV

Major challenges I faced during the course of this project came about when I tried:

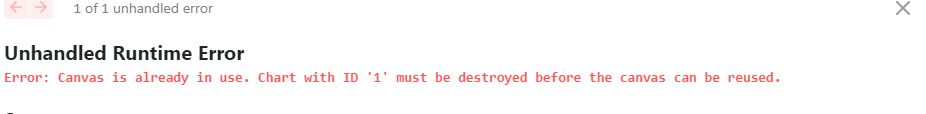

- Creating charts without destroying previous instances ( solved this using Stack Overflow & the Chart.js docs )

- Using the JavaScript .at() method ( solved this with the help of Eruda ;) )

Creating charts without destroying previous instances

As this was my first time working with charts, I ran into an error caused by unknowingly trying to re-render charts without destroying the previous instances.

At first, I abstracted the logic for generating a chart into a helper function ( buildChart ) that takes a config object as a parameter and creates a chart from its properties.

This function was able to create different charts ( pie charts and line charts specifically ) based on the config object it takes. No more, no less.

Unfortunately, an error came up whenever the function was called again after its initial call ( the function is called for each chart that's rendered ).

After much toing and froing between Stack Overflow and the Chart.js documentation, I came up with a solution. I attached a charts variable to the global window object. The charts variable is an array that holds each instance of the Chart class ( imported from the Chart.js library ) at an index that corresponds to an id passed to the buildChart function. Yes, in addition to the config object, buildChart had to take another parameter: id. Each chart on the analytics page of Shortenr has an id that corresponds to the index where it is stored in the charts variable.

When buildChart is called, it uses the id to check charts for a running instance of that particular chart. If the instance exists, it is destroyed before a new Chart instance is stored at that index; otherwise, the new instance is stored directly. Trust me, as easy as it might appear, it wasn't easy for me to come up with this at first. It feels like a sort of clean-up operation to me.😃

Using the JavaScript .at() method

I found myself in the so-called 'It works on my machine' situation when Ahmad's browser awakened a sleeping bug ( maybe😭😭 ) while he was trying to access the analytics page on his mobile phone. What was surprising and shocking was that it worked on my 'machine'.

I used someone else's mobile phone, as Ahmad requested, and it worked. No issues. I was seriously confused; it was my first experience of this, I will say.

Unlike on PCs and laptops, accessing a mobile browser's console ( which would have made it very easy to see what was wrong ) isn't that straightforward. Ahmad tried to access his browser's console at his end, but to no avail.

After some searching, I came across the saviour, Eruda 💯.

Eruda made it possible to view Ahmad's browser console ( I just added some scripts to my code ).

To my surprise, the .at() method I used in my code wasn't recognized by Ahmad's browser.

It was really a wow moment for me because, all this while, I had been suspecting the code involved in generating the charts.

To resolve this, I resorted to the common way of accessing the last element of an array:

array[ array.length - 1 ]

Avoiding this longer form was the reason I used the .at() method in the first place. On deploying my fix, the web app worked fine on Ahmad's device.

In all, I came to realize the need to confirm browser compatibility for newer language features before using them ( sites like caniuse can be useful ).

Also, I think generating polyfills using npm packages like core-js could have helped too.

Frontend vs Backend Collaboration and Challenges

Ahmad's POV

When I saw the notification about the new hackathon, I initially wanted to build and submit a REST API. That's what I know how to do. However, one of the requirements was a nice user interface ( UI ). So, as a backend guy, I needed a frontend guy.

I had never worked with a frontend team or collaborated on a project with one. It's usually solo for me.

Collaborating with Abass was a nice adventure and an eye-opener. He is a good communicator and very good at what he does. When I told him about the hackathon and he agreed to collaborate with me, we had a quick session to discuss what we were going to build and how we were going to build it. We agreed on building a Minimum Viable Product that would still show the core features of the web application.

The journey was not without challenges, and some of them are highlighted below:

When I deployed on DigitalOcean, the API was insecure: it was served over HTTP rather than HTTPS.

Our first major challenge was this:

“The page at shortenr.vercel.app was loaded over HTTPS, but requested an insecure XMLHttpRequest endpoint http://134.122.124.219. This request has been blocked; the content must be served over HTTPS.”

Obtaining an SSL certificate to secure the endpoint was not an option for me, so I had to redeploy on Heroku.

The second major challenge was a cross-origin request issue:


When I supplied Abass with the API, I intended to allow all origins to access the endpoint, since he had to access it both from his localhost and from his remote frontend server.

He told me he was getting the issue below:

I was confused because I had set the CORS configuration to allow all origins.

I had to go back and re-read the documentation of the django-cors-headers module to see if there was something I hadn't done right. I had done everything right, or so I thought. After about an hour of tweaking and asking him to retry, it still didn't work:

It turned out to be a simple syntax-related slip 😂. I wrote:

CORS_ALLOW_ALL_ORIGINS : True

Instead of:

CORS_ALLOW_ALL_ORIGINS = True
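A likely reason this slip raised no error is that the colon form is still valid Python: a name followed by a colon and an expression is a variable annotation, and an annotation on its own assigns nothing. The sketch below shows this with plain exec and no Django. CORS_ALLOW_ALL_ORIGINS is the name django-cors-headers uses for its allow-all setting in current releases, and settings_defined is a hypothetical helper.

```python
def settings_defined(source: str) -> set[str]:
    """Run settings-like source code and return the uppercase names it defines."""
    namespace: dict = {}
    exec(source, namespace)
    return {name for name in namespace if name.isupper()}


# The colon form is an annotation: it runs without error but binds no value.
annotated = settings_defined("CORS_ALLOW_ALL_ORIGINS: True")

# The equals form is an assignment: the setting now exists.
assigned = settings_defined("CORS_ALLOW_ALL_ORIGINS = True")

print(annotated)
print(assigned)
```

Because nothing fails at import time, the setting silently keeps its default value, which would match the behaviour described above.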

We finally got a different error, which was a relief. A sign of progress, isn't it 😂?

The new error was an easy fix: I was missing a comma in my list of middleware.

Abass's POV

Yeah, collaborating with Ahmad was really nice, as he is really good at what he does. Although some challenges came up, such as the CORS issues he mentioned above, the regular back-and-forth communication we had during the course of this project went a long way towards getting us this far. Personally, I learned a tonne from this collaboration.

How we built shortenr

Ahmad's POV

I built the Shortenr backend with Django and Django Rest Framework.

We decided not to use Django's Model-View-Template ( MVT ) approach because we wanted a separation of concerns, and we wanted both the backend and frontend to scale and have a level of independence.

I provided the REST API endpoints that are consumed by the frontend, and I used JWT for authentication.

I built the API with API best practices in mind.

The API is likely to evolve, and the schema might change as more functionality is added. To prevent this from breaking the frontend that consumes the API, I made sure to employ versioning. The current endpoint URLs are still at version one.

I made sure that the Link endpoint for retrieving Link resources supports pagination, filtering, and searching, so resources are easy to access from the frontend.

The short alias I create for the user's long link is derived with the MD5 hash algorithm. MD5 produces a 128-bit hash value, which is 32 characters when written in hexadecimal, of which we take only the first 6 characters as the alias for each link.

What's the likelihood of collision ( an alias mapping to multiple links )?

The 6-character aliases are made from combinations of the 26 capital letters and 26 small letters of the English alphabet and the 10 digits ( 0-9 ), with repetition allowed.

Any of the 62 characters can be in the first position of the alias.

Any of the 62 characters can also be in the second position ( since repetition is allowed ).

In general, any of the 62 characters can occupy each of the 6 positions, meaning we have:

62×62×62×62×62×62=62⁶

Which is 56,800,235,584 ( about 56.8 billion ) possible combinations.

The probability of two given links having the same short alias is therefore very low.
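To put rough numbers on this, the sketch below computes the size of the alias space and a birthday-bound estimate of the chance that at least two links collide once many links are stored. It assumes aliases are spread uniformly over the 62⁶ possibilities, which is an idealization, and the function names are hypothetical.

```python
import math

ALIAS_LENGTH = 6
ALPHABET_SIZE = 62  # 26 uppercase + 26 lowercase + 10 digits


def alias_space() -> int:
    """Number of distinct aliases of ALIAS_LENGTH characters."""
    return ALPHABET_SIZE ** ALIAS_LENGTH


def collision_probability(link_count: int) -> float:
    """Birthday-bound approximation: 1 - exp(-n(n-1) / (2N))."""
    pairs = link_count * (link_count - 1) / 2
    return -math.expm1(-pairs / alias_space())


print(alias_space())  # 56800235584
for n in (1_000, 100_000, 1_000_000):
    print(n, f"{collision_probability(n):.6f}")
```

For any single pair of links the chance is 1 in 62⁶, but the estimate grows with the number of stored links, so a uniqueness check on the alias is still worth having.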

The hash value is computed from the string combination of the long URL the user submitted and the user's email. This ensures that multiple users can each create their own alias for the same long URL.
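One way to get a 6-character alphanumeric alias out of an MD5 hash is sketched below. The base-62 step is an assumption made for illustration, not a description of the project's code: a plain hexadecimal digest only uses 16 distinct characters, which would give 16⁶ = 16,777,216 aliases rather than 62⁶. The names make_alias and ALPHABET are hypothetical.

```python
import hashlib
import string

# 10 digits + 26 uppercase + 26 lowercase = 62 characters.
ALPHABET = string.digits + string.ascii_uppercase + string.ascii_lowercase


def make_alias(long_url: str, email: str, length: int = 6) -> str:
    """Derive a short alias from the MD5 hash of the long URL plus the email."""
    digest = hashlib.md5(f"{long_url}{email}".encode("utf-8")).digest()
    number = int.from_bytes(digest, "big")  # the 128-bit hash as an integer

    # Peel off base-62 digits, least significant first, until the alias is full.
    chars = []
    for _ in range(length):
        number, remainder = divmod(number, len(ALPHABET))
        chars.append(ALPHABET[remainder])
    return "".join(chars)


alias = make_alias("https://example.com/a/very/long/path", "user@example.com")
print(alias)
```

The same URL and email always produce the same alias, while a different email changes the hash input and, with very high probability, the alias.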

If a user submits a link that is already in their collection, the existing short URL is returned.

I set up a cron job with Celery, using Redis as the message broker, to find and delete all links that have not been visited for 10 days or more. This ensures that stale links do not take up space in our database.
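The selection rule behind that clean-up job can be sketched in plain Python. In the real project this would be a Celery task running a Django ORM query; here the Link dataclass, the find_stale_links helper, and the sample aliases are hypothetical stand-ins used only to show the cutoff logic.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

STALE_AFTER = timedelta(days=10)


@dataclass
class Link:
    alias: str
    last_visited: datetime  # stored in UTC


def find_stale_links(links: list[Link], now: datetime) -> list[Link]:
    """Return links whose last visit was 10 or more days before `now`."""
    cutoff = now - STALE_AFTER
    return [link for link in links if link.last_visited <= cutoff]


now = datetime(2022, 7, 31, tzinfo=timezone.utc)
links = [
    Link("aB3xY9", now - timedelta(days=2)),
    Link("Zk81Qp", now - timedelta(days=10)),
    Link("m0N7cd", now - timedelta(days=45)),
]

stale = find_stale_links(links, now)
print([link.alias for link in stale])
```

Passing now in as a parameter keeps the rule easy to test, since the scheduled job can supply the current UTC time while tests supply a fixed one.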

Abass's POV

Shortenr's frontend was built mainly with Next.js and TypeScript, alongside Tailwind CSS.

A layout component was created for the layout common to all pages, and the pages are passed to it as children. The appearance of some components in the layout depends on the route the user is currently on.

Global state, such as the logged-in user, is managed using the React Context API.

Data fetching is done using Axios and the useSWR hook.

Persistent user sessions are made possible by an Axios request interceptor, which checks the validity of the login token and automatically requests a new one once it has expired or become invalid.

The charts were created using the Chart.js library. A function ( buildChart ) takes the details of a particular chart and its id as parameters and uses them to generate the corresponding chart.

Most of the features of the Shortenr UI exist as components that get composed on whatever page they are needed.

Optimization Techniques

Back end

The real problem is that programmers have spent far too much time worrying about efficiency in the wrong places and at the wrong times; premature optimization is the root of all evil (or at least most of it) in programming. —Donald Knuth

I don't worry too much about optimization in the backend. However, because the queries that fetch the analytics are expensive, I optimized them using Django's prefetch_related and select_related. select_related follows foreign keys with a SQL join in the same query, while prefetch_related fetches each related set in one additional query and joins the results in Python, which avoids issuing a query per row.

Also, I indexed the fields that are frequently searched and queried to reduce database access time.

Why not caching?

If caching is not done appropriately, we risk serving stale content to users. The content served to the frontend is dynamic and bound to change quite often, so I didn't implement caching, to ensure that stale analytics are not rendered.

However, this could be carefully implemented in the future.

Another optimization technique I am looking forward to implementing is database replication. I will set up multiple databases, where one serves read operations and the other serves write operations. This should reduce the query time for the database.

Using the Planetscale database itself is a form of optimization. The data access from the database was surprisingly fast.

Frontend

In particular, I avoided redundant requests by taking advantage of the caching and request deduplication that SWR provides out of the box. With its Optimistic Data feature, I was also able to update local data while revalidation is in progress, so the UI updates immediately rather than waiting for revalidation to finish.

Also, I kept state close to where it is actually needed in order to prevent unnecessary re-renders.

The Pages

The Signup page:

The Activation notification page:

The Login page:

The Homepage for unauthenticated users:

The Homepage:

The Analytic Dashboard page:

The Delete account page:

The Structure of the Payload

The Swagger Documentation

Using Planetscale

Using Planetscale was quite interesting and not too stressful. They have really nice documentation that helps you do just about anything with their database, from connecting to creating a deploy request.

The branching and deploy request features were quite handy. They allowed us to have a development branch where we changed the schema frequently without messing with production. We just had to create a deploy request to merge the development branch's schema into the production database.

The Links

Frontend Only Github Repository

Video Demonstration

What inspired us

The development of Shortenr was inspired by the potential value of being able not just to generate an ordinary short URL for a long one, but also to get a range of analytics to track the link's usage. In attempting to just shorten a link, one gets more than just that.

This has the potential to help business owners gain insight into their customers, or to let people know how well the adverts or campaigns they run on advertising platforms convert.

Todo and Future Plan

We decided to build a minimum viable product that shows what our application can do. There is still a tonne of things we want to do, like:

• Allow users to add custom short links

• Allow users to specify the date their link will expire

• Allow for more Authentication related operations in the frontend

• Render the per week analytics in the frontend

• and a lot more

Conclusion and Appreciation

The whole experience has been really really really interesting and it was really worth it😉

Finally, we show appreciation to Hashnode and Planetscale for giving us this opportunity to learn and build!